Full disclosure: these are estimates, not proven statistics. The owners of these LLMs very rarely disclose their parameter counts, energy consumption, water usage, or CO₂ emissions, so we estimated these figures by calculating from information that has already been disclosed. Below this graph, we show how we came to our conclusions.

Per-query visualization with real-world equivalents

About this data: The table shows estimated environmental impact metrics for various large language models (LLMs) per query. Models with more parameters typically require more computational resources, resulting in higher energy and water consumption and CO₂ emissions.

Energy comparison: Energy consumption is measured in watt-hours (Wh) per query. For reference, a typical iPhone 16 battery holds about 12 Wh, so one full charge is treated as 12 Wh.

Water comparison: Water usage is measured in milliliters (mL) per query. A standard bottle of water contains 500 mL. Water is primarily used for cooling data center equipment during model operation.

CO₂ comparison: CO₂ emissions are measured in grams (g) per query. An average passenger vehicle emits about 0.15 g of CO₂ per second (or 9 g per minute) of driving.

Chart panels:

- iPhone 16 Charges: the fraction of one full charge that a single query equals
- Water Bottles: the portion of a 500 mL water bottle
- Car Driving: seconds of driving an average car


Clarification on Query Length:

- User Input: A standard user question or prompt can range from 5 to 10 tokens.

- Model Response: The model’s reply can vary significantly but, for estimation purposes, is assumed to be around 10 to 15 tokens.

Therefore, a combined total of approximately 20 tokens per “query” was used for the calculations. That is about 15 English words, using the common rule of thumb of roughly 0.75 words per token.

Parameter Estimations

To calculate energy consumption, water usage, and CO₂ emissions, we need the parameter count of each LLM.

Notes:

- Estimation Methods: For models without official parameter disclosures, estimates are based on performance benchmarks, scaling laws, and comparisons with similar models.

- Variability: These estimates may vary due to differences in model architectures, training data, and optimization techniques.

| LLM Model | Developer | Estimated Parameter Count | Estimation Basis |
|---|---|---|---|
| GPT-4.5 | OpenAI | Approximately 1 trillion | While OpenAI has not officially disclosed GPT-4.5’s parameter count, estimates suggest it may have around 1 trillion parameters, considering its performance and scaling from previous models. |
| Claude 3.7 Sonnet | Anthropic | Approximately 1.2 trillion | Anthropic has not released specific details, but based on performance benchmarks and scaling trends, Claude 3.7 Sonnet is estimated to have around 1.2 trillion parameters. |
| Grok-3 | xAI | Approximately 2.5 trillion | Grok-3 is reported to have an exceptionally large parameter count, estimated at around 2.5 trillion parameters, designed for complex reasoning and coding tasks. |
| Gemini 2.0 Flash-Lite | Google DeepMind | Approximately 500 billion | Specific parameter counts are not publicly disclosed, but estimates based on performance suggest around 500 billion parameters. |
| Qwen 2.5-Max | Alibaba | Approximately 600 billion | While Alibaba has not released specific details, estimates based on similar models suggest around 600 billion parameters. |
| DeepSeek R1 | DeepSeek | Approximately 671 billion | DeepSeek R1 is estimated to have 671 billion parameters. |
| Llama 3.1 | Meta AI | 405 billion | Llama 3.1’s top model has 405 billion parameters. |
| Mistral Large 2 | Mistral AI | Approximately 500 billion | Specific details are not publicly disclosed, but estimates suggest around 500 billion parameters. |
| Falcon 180B | Technology Innovation Institute | 180 billion | Falcon 180B is known to have 180 billion parameters. |

Environmental Impact Calculations

Energy Consumption:

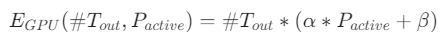

Energy usage scales approximately linearly with the number of parameters. Egologits has done an amazing job at modeling the relationship between LLM parameters and energy consumption. Here’s that super cool equation:

Using this equation, we were able to calculate each LLM’s approximate energy use with their approximate parameter count. Helpful!
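As a rough consistency check on the linear-scaling claim, the per-essay energy figures reported later in this article can be divided by the estimated parameter counts. This sketch does not reproduce the equation referenced above; it only reuses the numbers from the two tables in this article, and the names `ROWS` and `wh_per_billion` are made up for illustration.

```python
# (estimated parameters in billions, energy in Wh per essay),
# copied from the parameter table and the per-essay table in this article.
ROWS = {
    "GPT-4.5": (1000, 5.10),
    "Claude 3.7 Sonnet": (1200, 6.12),
    "Grok-3": (2500, 12.75),
    "Gemini 2.0 Flash-Lite": (500, 2.55),
    "Qwen 2.5-Max": (600, 3.06),
    "DeepSeek R1": (671, 3.40),
    "Llama 3.1": (405, 2.04),
    "Mistral Large 2": (500, 2.55),
    "Falcon 180B": (180, 0.92),
}


def wh_per_billion(params_billion: float, energy_wh: float) -> float:
    """Energy per essay divided by parameter count (Wh per billion parameters)."""
    return energy_wh / params_billion


ratios = {name: wh_per_billion(p, e) for name, (p, e) in ROWS.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.5f} Wh per billion parameters")
```

If the relationship is close to linear, the ratios should sit in a narrow band across all nine models, which is what the table values suggest.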

Water Usage:

Data center water usage varies, with reported averages ranging from 0.19 to 1.8 liters per kWh, according to TechTarget. For this estimate, we use a mid-range value of 1.0 liter per kWh. This paper also landed on the same figure, 1 L/kWh.

CO₂ Emissions:

CO₂ emissions depend on the energy source. For estimation purposes, an average of 0.5 kg CO₂ per kWh is assumed.
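The two conversion factors above turn an energy figure into water and CO₂ figures. Here is a minimal sketch of that arithmetic, assuming the factors exactly as stated (1.0 L/kWh and 0.5 kg CO₂/kWh); the function names are illustrative, and the 5.10 Wh input is the GPT-4.5 per-essay value from the table later in this article.

```python
WATER_L_PER_KWH = 1.0   # mid-range data center water usage, from the text
CO2_KG_PER_KWH = 0.5    # assumed average carbon intensity, from the text


def water_ml(energy_wh: float) -> float:
    """Estimated water use in milliliters for a given energy use in Wh."""
    energy_kwh = energy_wh / 1000.0
    return energy_kwh * WATER_L_PER_KWH * 1000.0  # liters -> milliliters


def co2_g(energy_wh: float) -> float:
    """Estimated CO2 emissions in grams for a given energy use in Wh."""
    energy_kwh = energy_wh / 1000.0
    return energy_kwh * CO2_KG_PER_KWH * 1000.0  # kilograms -> grams


energy = 5.10  # Wh per essay, GPT-4.5 row
print(f"water: {water_ml(energy):.2f} mL, CO2: {co2_g(energy):.2f} g")
```

Because 1 L/kWh is the same as 1 mL/Wh, the water figure in mL always matches the energy figure in Wh, and the CO₂ figure in grams is half of it.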

Real-World Impact

Now, what does this all mean? To some, this is just a bunch of numbers, but the real picture emerges through real-world examples. Let’s say you use AI to write a 500-word essay. How much does that cost?

Notes on Equivalents:

- iPhone 16 Charge: One full charge is about 12 Wh.

- Water Bottle: One standard water bottle is 500 mL.

- Driving: An average car emits about 0.15 g CO₂ per second.

| LLM Model | Energy (Wh/essay) | iPhone 16 Charges (per essay) | Water (mL/essay) | Water Bottle Fraction (per 500 mL) | CO₂ (g/essay) | Driving Time Equivalent (seconds) |
|---|---|---|---|---|---|---|
| GPT-4.5 | 5.10 | 0.43 | 5.10 | 0.010 | 2.55 | 17.0 |
| Claude 3.7 Sonnet | 6.12 | 0.51 | 6.12 | 0.012 | 3.06 | 20.4 |
| Grok-3 | 12.75 | 1.06 | 12.75 | 0.026 | 6.38 | 42.5 |
| Gemini 2.0 Flash-Lite | 2.55 | 0.21 | 2.55 | 0.005 | 1.28 | 8.5 |
| Qwen 2.5-Max | 3.06 | 0.26 | 3.06 | 0.006 | 1.53 | 10.2 |
| DeepSeek R1 | 3.40 | 0.28 | 3.40 | 0.007 | 1.70 | 11.3 |
| Llama 3.1 | 2.04 | 0.17 | 2.04 | 0.004 | 1.02 | 6.8 |
| Mistral Large 2 | 2.55 | 0.21 | 2.55 | 0.005 | 1.28 | 8.5 |
| Falcon 180B | 0.92 | 0.08 | 0.92 | 0.002 | 0.46 | 3.1 |
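The equivalent columns in the table follow from the three reference values listed in the notes (12 Wh per iPhone 16 charge, 500 mL per bottle, 0.15 g CO₂ per second of driving). Here is a small sketch of that conversion; the function name `equivalents` and the dictionary keys are illustrative choices, not part of the original calculation.

```python
IPHONE_CHARGE_WH = 12.0    # one full iPhone 16 charge, per the notes
BOTTLE_ML = 500.0          # one standard water bottle, per the notes
CAR_G_CO2_PER_S = 0.15     # average car emissions per second, per the notes


def equivalents(energy_wh: float, water_ml: float, co2_g: float) -> dict:
    """Convert per-essay metrics into the everyday equivalents used in the table."""
    return {
        "iphone_charges": energy_wh / IPHONE_CHARGE_WH,
        "bottle_fraction": water_ml / BOTTLE_ML,
        "driving_seconds": co2_g / CAR_G_CO2_PER_S,
    }


# GPT-4.5 row: 5.10 Wh, 5.10 mL, 2.55 g CO2 per essay
gpt45 = equivalents(5.10, 5.10, 2.55)
for key, value in gpt45.items():
    print(f"{key}: {value:.4f}")
```

For the GPT-4.5 row this gives about 0.425 charges, 0.0102 bottles, and 17 seconds of driving, which agrees with the table after rounding.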

We can see that there’s a large disparity in resource consumption between the different models. An essay with Grok-3 costs you about 43 seconds of driving and slightly more than a full phone charge. An essay with Falcon 180B? Your phone dropped 8% and you barely left the driveway.

Conclusions

- Scale Matters:

Every individual query might have a small, negligible impact, but the cumulative effect is a substantial environmental footprint. This emphasizes the importance of sustainable practices as AI becomes more pervasive in our daily lives.

- Model Efficiency Is Key:

The graph shows a clear variance among the models. Models like Grok‑3, which boast higher parameter counts, naturally consume more energy and emit more CO₂. In contrast, leaner models such as Falcon 180B have a noticeably lower per‐query environmental cost. This difference should encourage both developers and users to consider energy efficiency as a critical factor alongside performance.

- Real-World Comparisons Help Us Understand Impact:

Converting abstract numbers into everyday equivalents—like smartphone charges or seconds of driving—helps us visualize the environmental cost. It turns technical data into a relatable context, making it easier for everyone to grasp the trade-offs involved in powering these powerful language models.

- A Call for Sustainable AI Development:

The insights drawn from these estimates call for a balanced approach in AI development. As we push for more capable models, we must also invest in strategies to reduce energy consumption, improve water efficiency, and lower CO₂ emissions. In doing so, we can ensure that AI continues to advance without an undue burden on our planet.